AI-Enabled Search

Smarter searching through intellectual property

| Project Type | Rapid Prototyping, Agile, User Testing | |
|---|---|---|
| Role | Experience Design Lead | Elise Ansher |
| | Senior Experience Designer | Kevin Choi |
| | Service Design Lead | Michael Buquet |
| | Technical Lead | Michael Ramos |
| Length | 1 year | |

AI-Enabled Search (AES) helps researchers identify and search through relevant documents more confidently and efficiently.

I designed and built AES with Google data scientists and a cross-functional development team to improve search at a federal agency that regulates intellectual property.

Project brief and background.

To best address our users’ needs, we had to balance our AI model’s capabilities with the existing agency search experience.

We decided to build our prototype as a Chrome plugin that enhances the existing search tool for a few reasons:

- Agency and researcher union policy stipulates which digital tools researchers can use.

- Working within a contained environment allowed us to deploy and test AES without affecting agency search development or researchers' day-to-day work.

A user story prioritization exercise

We prioritized three areas of exploration – Similarity, Expand, and Coverage – based on data model limitations and impact to the existing search experience.

Working in two-week sprint cycles, we followed agile development procedures to design, build, implement, and test the AI-Enabled Search MVP.

User research sessions.

Each sprint, I facilitated feedback sessions, ensuring that all designs were co-created and validated with end-users from concept to code.

Depending on our research goals, I hosted different types of sessions:

Detailee Focus Group

I met with a group of researchers once a sprint to brainstorm, iterate on, and discuss AES vision and features.

Researcher Engagement

I met with researchers one-on-one to validate user flows and gather targeted feedback.

User Testing

I met with researchers one-on-one to test the implemented prototype and collect click data.

Over the first six months of the project, we explored how users could interact with our three flagship AI models.

In the next six months, we worked with end-users to refine our initial features across three key themes – clarity, exploration, and harmony.

Clarity.

Researchers prefer to spend their time reading documents rather than searching for them. They need concise copy that supports an intuitive user experience as they search for relevant references.

USER FEEDBACK QUOTES

- "Is there a tool tip? It may be clear when I click but I don’t know without more."

- "I’m getting the idea of what other areas I should look at to search in."

- "I wouldn't know what to expect this [Add Results] button to do at first glance."

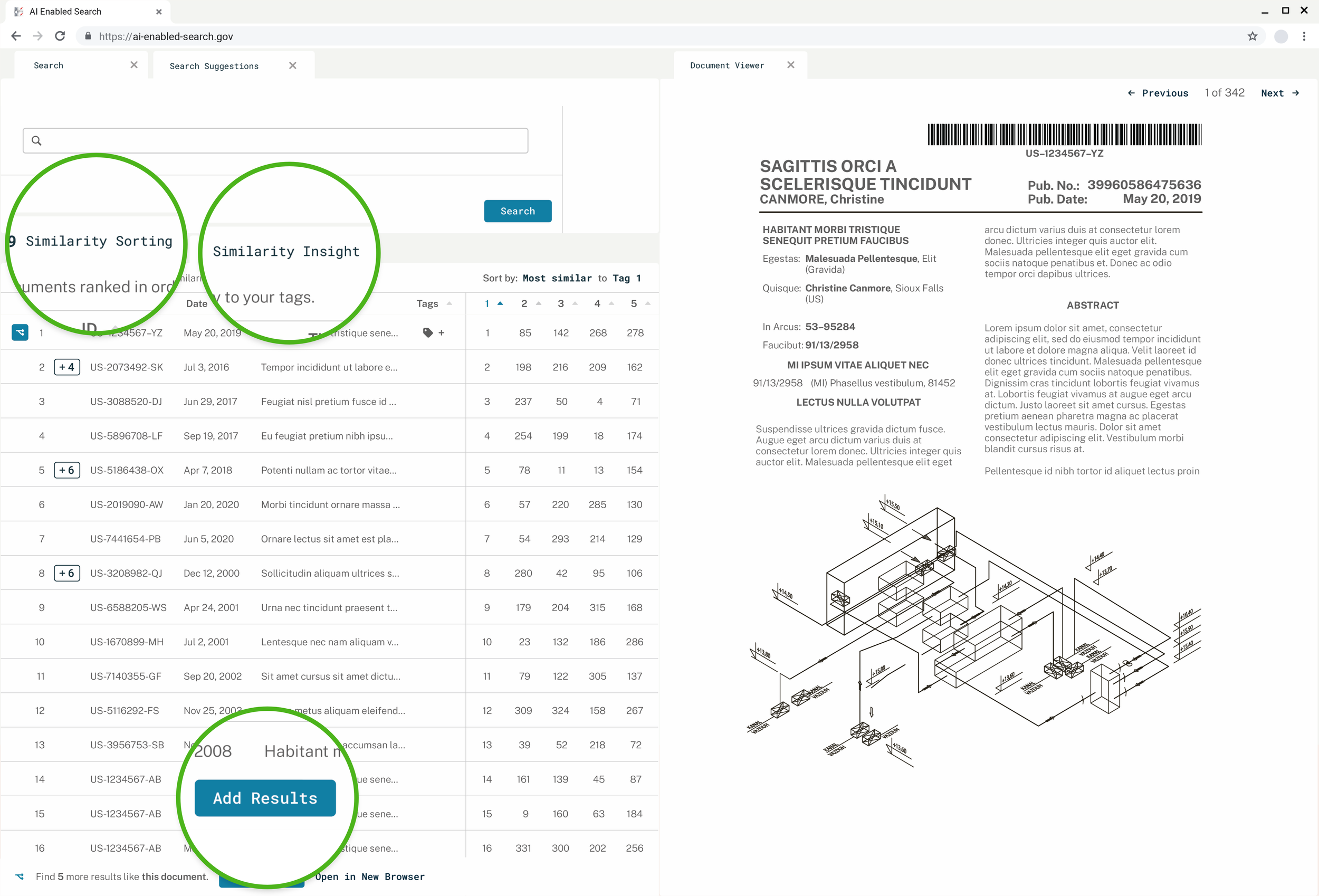

Feature Labels

First and foremost, users need to understand what a tool can do.

- Before: Users could not easily intuit what features did from their names.

- After: I renamed our features to better reflect their intended use.

Working with our Detailee Focus Group over several sprints, I relabeled our features with action-oriented names, meant to reinforce each tool’s primary function.

Context Clues

I also explored how to explain complex AI functionality in plain language.

- Before: Users found the contextual information difficult to find on the page.

- After: I simplified the copy, making it easier to read.

Strategically placed context clues help reduce the learning curve, lowering the barrier for users to understand and get to know the AI features.

Exploration.

When searching for a good reference, researchers rely on their subject-area knowledge and their prior experience. Researchers expect AI to help them browse through documents both more confidently and more efficiently.

USER FEEDBACK QUOTES

- "Knowing my area, I would rather look at more documents than fewer."

- "What about this document makes me want to stop and read?"

- "Seeing what’s similar to what I’ve tagged – now that’s not that bad of an idea!"

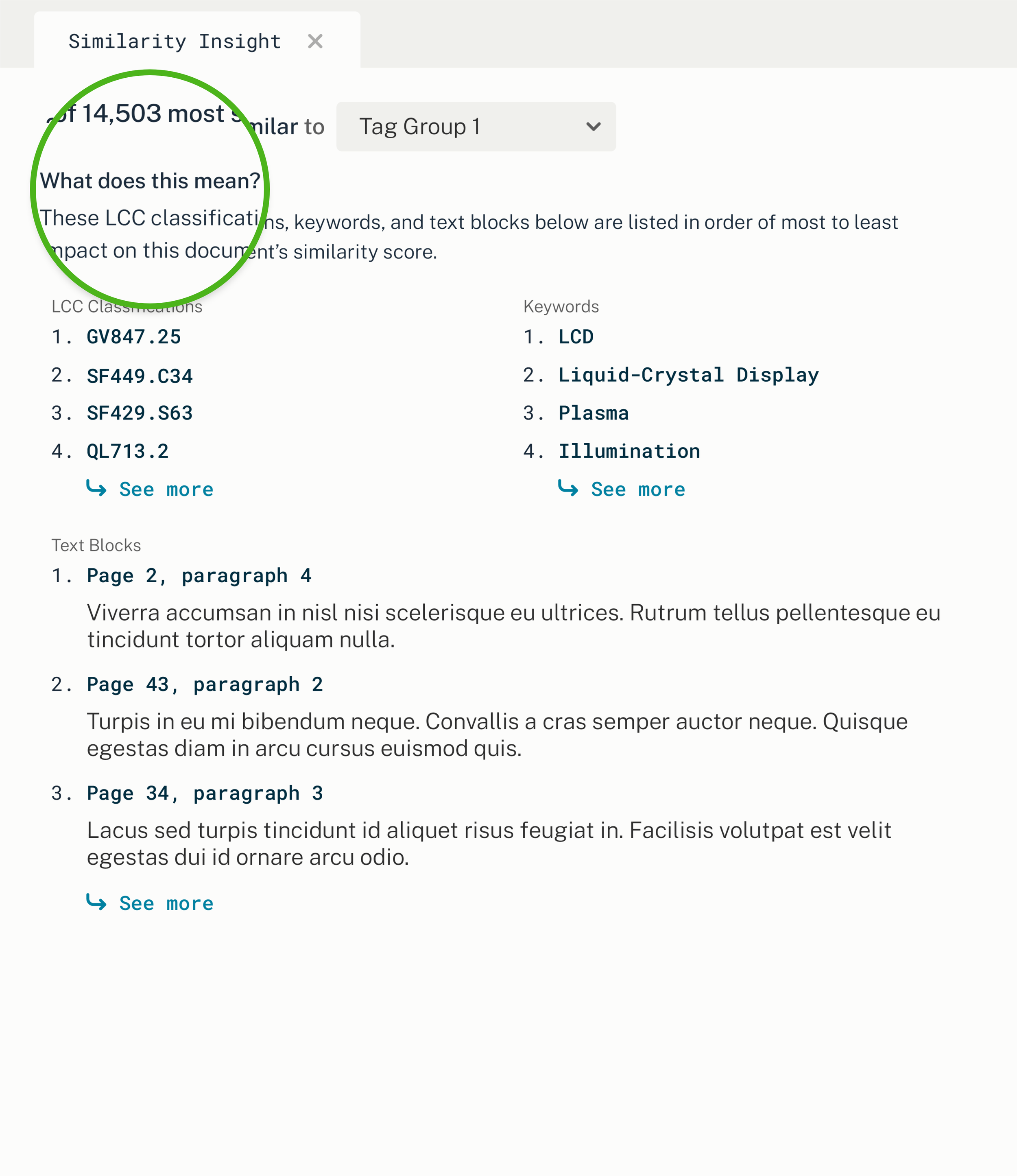

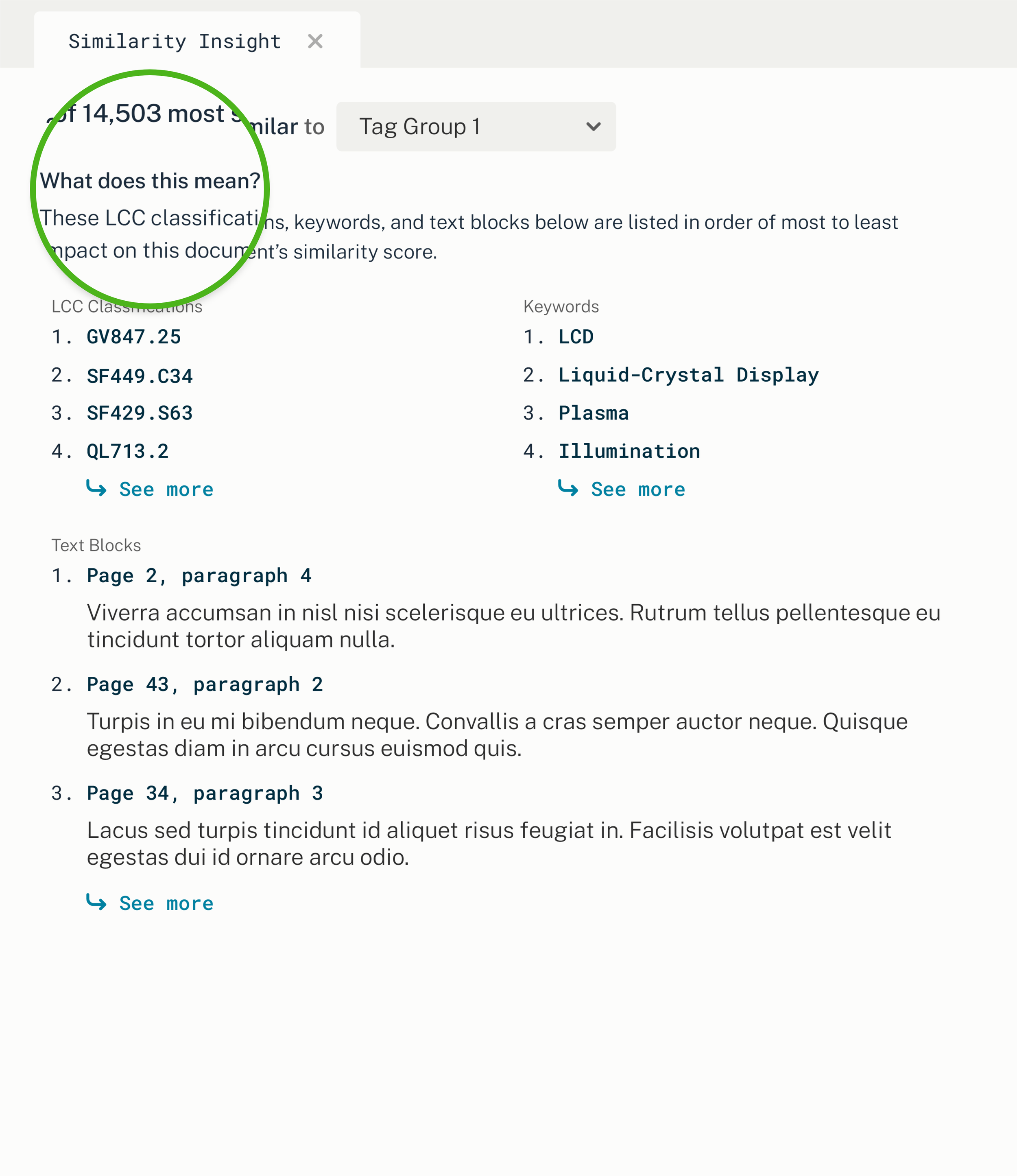

Explainable AI

We introduced and tested a new feature, Similarity Insight, designed to make the similarity rank data more accessible.

- Before: Users were very eager to understand what similarity meant to our data model.

- Testing: I tested many different layouts to maximize readability.

- After: Ultimately, users preferred a stripped-down, scannable version.

Users had trouble understanding what similarity between references represents, even though they agreed that similarity was a valuable data point.

Similarity Insight shows a user how the AI model calculated a document’s score, listing the contributing data in order of relevance.
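As a rough illustration of the idea (not the AES model itself, whose internals are not described here), the sketch below assumes documents are represented as sparse term-weight vectors and that the score is a cosine similarity. The names `TermWeights`, `Contribution`, and `explainSimilarity` are hypothetical. It breaks a single score into per-term contributions and lists them from most to least influential, which is the kind of scannable breakdown a feature like Similarity Insight could surface.

```typescript
// Hypothetical sketch: none of these names come from the AES codebase.
// Assumption: a document is a sparse map from term to weight.
type TermWeights = Record<string, number>;

interface Contribution {
  term: string;
  contribution: number;
}

// Euclidean length of a sparse term-weight vector.
function norm(weights: TermWeights): number {
  return Math.sqrt(Object.values(weights).reduce((sum, w) => sum + w * w, 0));
}

// Cosine similarity split into per-term contributions, largest first.
function explainSimilarity(
  query: TermWeights,
  doc: TermWeights
): { score: number; contributions: Contribution[] } {
  const denom = norm(query) * norm(doc);
  if (denom === 0) {
    // An empty vector has no meaningful similarity.
    return { score: 0, contributions: [] };
  }
  const contributions: Contribution[] = Object.keys(query)
    .filter((term) => term in doc)
    .map((term) => ({ term, contribution: (query[term] * doc[term]) / denom }))
    .sort((a, b) => b.contribution - a.contribution);
  // The overall score is the sum of the shared-term contributions.
  const score = contributions.reduce((sum, c) => sum + c.contribution, 0);
  return { score, contributions };
}

// Usage: two documents that share the terms "battery" and "electrode".
const result = explainSimilarity(
  { battery: 3, electrode: 4 },
  { electrode: 4, battery: 3, housing: 0 }
);
console.log(result.contributions.map((c) => c.term));
```

Because each contribution is one term of the same sum that produces the score, the list shown to the user always adds up to the number they see in the results table.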

In-Line Buttons

Researchers know a good reference when they see one and want AI to help them find more sources at the click of a mouse.

- Before: Users could not easily find Add Results (formerly "Expand") in a pop-over.

- After: Users easily discovered Add Results with an in-line button and tool tip.

We adapted Add Results (formerly ‘Expand Search’) from a clunkier pop-over interaction to an in-line experience, linking users to a favorite feature with less friction.

Harmony.

Researchers want the AES plugin to connect with agency tool features in order to provide more ways to learn about the quality of their search. Researchers also have an established way of doing things and expect AI to work in a familiar way.

USER FEEDBACK QUOTES

- "Can I see what the model picks out as important about my own tag groups?"

- "Can I compare the similarity of the results of each of my queries instead of tag groups?"

- "I want easily to add these classification suggestions to search – like Build Query."

Nested Tables

Researchers are used to navigating nested tables in the agency search tool.

- Before: Researchers use nested tables to hide duplicate documents in the agency search tool.

- After: Users recognized our Add Results nested tables from the familiar UI pattern.

Nested Tables save users browsing time while modeling how to insert more documents to an existing list.

I designed Add Results using the familiar UI pattern to bring researchers additional documents in context.

Tagging Documents

Our Similarity Sorting feature competes for on-screen real estate with the agency search tool’s tagging UI pattern.

- Before: The agency search tool uses checkbox columns to add documents to tag groups.

- Testing: Users had trouble distinguishing the similarity score from the tagging controls.

- After: Users liked adding tags in a pop-over interaction better than the checkbox columns.

I designed an alternate tagging pattern to maximize the usability of tagging within our Similarity Sorting table.

Impact.

Having just wrapped our 46th sprint cycle, the team is continuing to design, build, and test our four (soon to be five!) plugin features.

AES features embedded in the researcher user flow

So far, 800 users have access to the AES prototype, 40% of whom use our AI-Enabled Search tools every day.